Compute Metrics

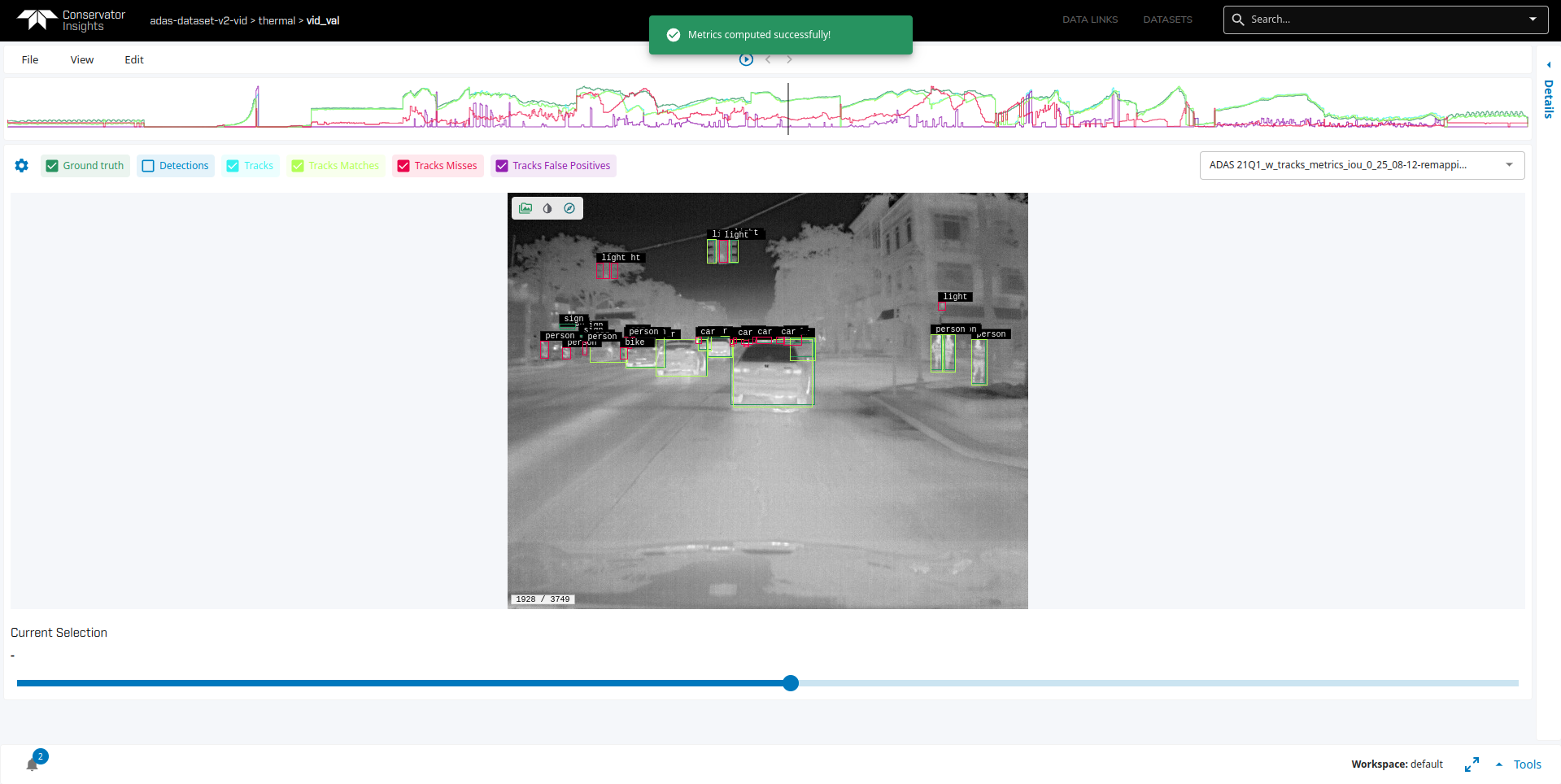

One of VisData’s most powerful features is the ability to quickly find and visualize detection errors. This allows users to run a frame-by-frame analysis of their model and explore the source of misses and false positives. It is also a useful tool for comparing per-class performance between different models or technologies.

Terminology

Intersection-over-Union (IoU): IoU quantifies the amount of overlap between two bounding boxes and helps determine the correctness of a detection. It is defined as the ratio between the area of overlap of two bounding boxes and the area of their union.

IoU Threshold: User-defined threshold used to determine whether a detection is a match, a miss, or a false positive.

Match: A detection bounding box that has the correct class label and overlaps a corresponding ground truth bounding box with an IoU above the specified IoU threshold.

Miss: A ground truth bounding box that doesn’t have a corresponding detection with an IoU above the specified IoU threshold.

False Positive: Any detection bounding box that is not a match. If there are duplicate detections for the same ground truth bounding box, only one is considered a match and all others are considered false positives.
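The definitions above can be sketched in a few lines of Python. This is a minimal illustration and not VisData's implementation: the box format `(x_min, y_min, x_max, y_max)`, the function names `iou` and `classify`, and the rule that duplicate detections are resolved in input order are all assumptions made for the example.

```python
def iou(box_a, box_b):
    """Intersection-over-Union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap region (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def classify(detections, ground_truths, threshold=0.5):
    """Split one frame into matches, false positives, and misses.

    detections and ground_truths are lists of (label, box) pairs.
    Assumption: detections are processed in input order, so the first
    detection that claims a ground truth box is the match and later
    duplicates become false positives.
    """
    matched_gt = set()
    matches, false_positives = [], []
    for det_idx, (det_label, det_box) in enumerate(detections):
        best_gt, best_iou = None, threshold
        for gt_idx, (gt_label, gt_box) in enumerate(ground_truths):
            if gt_idx in matched_gt or gt_label != det_label:
                continue
            overlap = iou(det_box, gt_box)
            if overlap > best_iou:  # "above the threshold", per the definition
                best_gt, best_iou = gt_idx, overlap
        if best_gt is None:
            false_positives.append(det_idx)
        else:
            matched_gt.add(best_gt)
            matches.append((det_idx, best_gt))
    misses = [i for i in range(len(ground_truths)) if i not in matched_gt]
    return matches, false_positives, misses


ground_truths = [("car", (0, 0, 10, 10)), ("bicycle", (20, 20, 30, 30))]
detections = [
    ("car", (1, 1, 10, 10)),     # overlaps the car ground truth
    ("car", (0, 0, 9, 9)),       # duplicate of the same car
    ("car", (50, 50, 60, 60)),   # overlaps nothing
]
matches, false_positives, misses = classify(detections, ground_truths)
print(matches, false_positives, misses)
```

In this example the first car detection is the match, the duplicate and the stray detection are false positives, and the bicycle ground truth is a miss.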

Remap and Labels

VisData allows users to customize how metrics are computed with the help of remap and labels JSON files. The remap file defines a mapping from ground truth class labels to a consistent set of labels specified in the labels file. The labels file defines which class labels to compute metrics for and filters out all other labels.

It is common for datasets to have ground truth annotations for various classes that users might not be interested in. For example, the COCO dataset has 80 object categories, including car, bus, bicycle, motorcycle, traffic light, and fire hydrant. A user might be interested in only three classes and train their model to detect motorized vehicle, bicycle, and traffic light. In this case, the remap file will contain a dictionary mapping bus -> motorized vehicle, motorcycle -> motorized vehicle, and car -> motorized vehicle. The labels file will contain only the classes that the user wants to compute metrics for. Any other class labels in the ground truth will be filtered out by the labels file. For the example above, if the user wanted to compute metrics only for the motorized vehicle and bicycle classes, the remap and labels files would be defined as follows:

Remap File Example

    {
        "bus": "motorized vehicle",
        "car": "motorized vehicle",
        "motorcycle": "motorized vehicle"
    }

For more details see the Remap File definition.

Labels File Example

    {
        "name": "ObjectClass",
        "values": [
            "motorized vehicle",
            "bicycle"
        ]
    }

For more details see the Label Vector definition.

Note

Remap and labels files must follow the file structure above.

VisData can only remap class labels to labels defined in the labels file.

Remap files must be saved in the $WORKSPACE/remaps/ directory and labels files in the $WORKSPACE/labels/ directory. These files don’t have any naming restrictions, and you may have multiple labels and remap files in these directories.
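To make the effect of the two files concrete, here is a rough Python sketch that applies the example remap and labels content to a list of ground truth class labels. It is an illustration only: the function names `remap_and_filter` and `invalid_remap_targets` are hypothetical, and the order of operations (remap first, then filter) is an assumption based on the description above rather than a statement about VisData's internals.

```python
import json

# Contents of the example remap and labels files shown above.
REMAP_JSON = """
{
    "bus": "motorized vehicle",
    "car": "motorized vehicle",
    "motorcycle": "motorized vehicle"
}
"""
LABELS_JSON = """
{
    "name": "ObjectClass",
    "values": ["motorized vehicle", "bicycle"]
}
"""


def remap_and_filter(gt_labels, remap, allowed):
    """Remap each ground truth label, then keep only labels in the labels file."""
    kept = []
    for label in gt_labels:
        label = remap.get(label, label)  # labels without a remap entry stay as-is
        if label in allowed:
            kept.append(label)
    return kept


def invalid_remap_targets(remap, allowed):
    """Return remap targets that are missing from the labels file."""
    return sorted(set(remap.values()) - set(allowed))


remap = json.loads(REMAP_JSON)
allowed = set(json.loads(LABELS_JSON)["values"])

gt_labels = ["car", "bus", "bicycle", "traffic light", "fire hydrant"]
print(remap_and_filter(gt_labels, remap, allowed))
# ['motorized vehicle', 'motorized vehicle', 'bicycle']
print(invalid_remap_targets(remap, allowed))
# []
```

The second helper reflects the note that class labels can only be remapped to labels defined in the labels file: a non-empty result would point to a remap entry whose target is not listed there.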

Tutorial

Note

This tutorial assumes that you have already set up a workspace and have imported results for a given dataset.

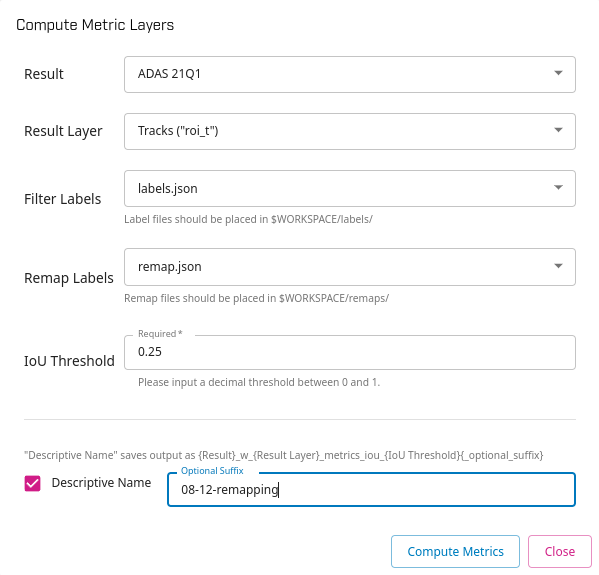

From the Dataset View, go to Edit -> Compute Metrics.

Next, fill out the form with desired metrics settings.

Result: Dataset result used to compute metrics. Current result is selected by default.

Result Layer: Layer used to compute metrics from the result chosen above, for example the detections layer or the tracking layer.

Filter Labels: Labels file in $WORKSPACE/labels/ used to compute metrics. By default, No Filtering is selected, which means VisData doesn’t filter any labels.

Remap Labels: Remap file in $WORKSPACE/remaps/ used to compute metrics. By default, No Remapping is selected, which means VisData doesn’t remap any labels.

IoU Threshold: Intersection-over-Union threshold used to distinguish matches, misses, and false positives. Must be a value between 0 and 1; common values are 0.25 and 0.5.

Descriptive Name: Selecting this option gives the created file a name of the following form:

{Result}_w_{Result Layer}_metrics_iou_{IoU Threshold}{_optional_suffix}

where each value within {} is substituted by a formatted version of the corresponding field above. {Result} is substituted by the _base name_ of the result selected in the Result field. If the Descriptive Name option is not selected, the file name is the _base name_ of the result selected in the Result field. Checking this option enables the Optional Suffix field below. In either case, if a file with that name already exists, it will be overwritten.

Optional Suffix: User-defined suffix to append to the created result file name. The Descriptive Name option must be selected.

Note

The _base name_ is a formatted version of the value in the Result field. It is the name of the result without the descriptive portion: _w_{Result Layer}_metrics_iou_{IoU Threshold}{_optional_suffix}. For example, given that Result Layer is detections, IoU Threshold is 0.25, and Optional Suffix is Test:

| Result Name Selected | Descriptive Name flag enabled? | Output File Name |
|---|---|---|
| YOLOv5 | yes | YOLOv5_w_detection_metrics_iou_0_25_Test |
| YOLOv5 | no | YOLOv5 |
| YOLOv7_w_track_metrics_iou_0_35_Example | yes | YOLOv7_w_detection_metrics_iou_0_25_Test |
| YOLOv7_w_track_metrics_iou_0_35_Example | no | YOLOv7 |
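The naming rule can be approximated with the short Python sketch below. The helper names `base_name` and `output_file_name` are hypothetical, and the formatting rules (the decimal point in the IoU threshold becomes an underscore, and the layer is passed in already formatted as `detection`) are inferred from the example rows, not taken from VisData itself.

```python
import re

# Descriptive portion of a result name: _w_{layer}_metrics_iou_{threshold}{_suffix}
_DESCRIPTIVE_PART = re.compile(r"_w_.+?_metrics_iou_.*$")


def base_name(result_name):
    """Strip the descriptive portion, if any, from a result name."""
    return _DESCRIPTIVE_PART.sub("", result_name)


def output_file_name(result_name, layer, iou_threshold, descriptive, suffix=""):
    """Build the output file name for the given form settings.

    Assumption: `layer` is the already formatted layer string (for example
    "detection"), since the exact formatting rule is not documented here.
    """
    base = base_name(result_name)
    if not descriptive:
        return base
    iou_text = str(iou_threshold).replace(".", "_")  # 0.25 -> "0_25"
    name = f"{base}_w_{layer}_metrics_iou_{iou_text}"
    if suffix:
        name += f"_{suffix}"
    return name


print(output_file_name("YOLOv5", "detection", 0.25, True, "Test"))
# YOLOv5_w_detection_metrics_iou_0_25_Test
print(output_file_name("YOLOv7_w_track_metrics_iou_0_35_Example", "detection", 0.25, False))
# YOLOv7
```

Because the base name is recomputed from the selected result each time, computing metrics on a result that already has a descriptive name does not stack a second descriptive portion onto it.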

Finally, click on Compute Metrics. VisData will automatically switch to the created result file with metrics.